Authors: Yokub Khalimov, Ilkhom Dzhamolov, Nurbonu Mamadikimzosa (Tajikistan), Bakdaulet Anarbaev (Kazakhstan), Aksana Zamirbekova (Kyrgyzstan)

Formally, a bot is a software application that runs automated tasks, such as automatically publishing posts and comments on social media or adding likes and reposts.

However, fake profiles and accounts on social media that are run by real people are also called “bots”. These people leave comments on the internet either for money or under coercion.

Bots first appeared in the commercial sector, long before social media became popular, when laudatory comments about products began to appear on companies’ websites. Later, politicians adopted the same technique.

Bots usually become very active ahead of major political events. They often praise the authorities, comment under posts on politicians’ official accounts and under links to articles on news websites, and support those who criticise anyone deemed persona non grata.

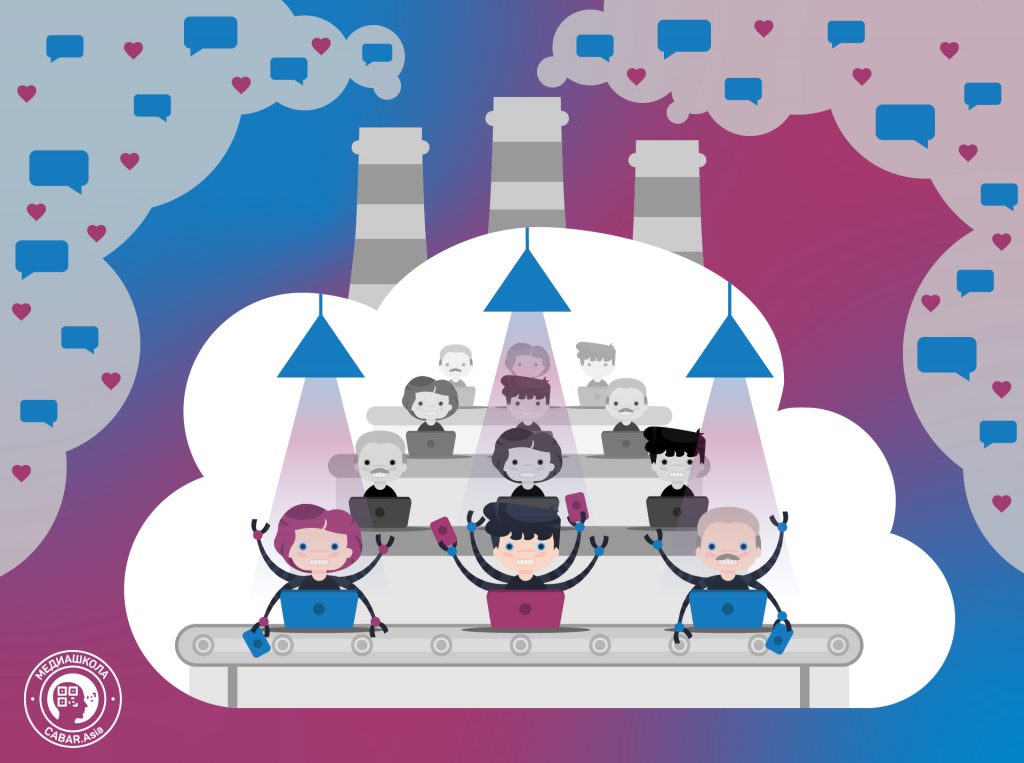

Bots usually work together. Behind them is not a single person, but entire “farms” or “factories”, where every participant runs up to 20 fake accounts and leaves a few hundred comments every day. This is how they can influence public opinion.

Bots attack specific people on the internet, spread fake news, and discredit certain opinions. They stage discussions among themselves and make sponsored topics trend through mutual reposts and likes. Their task is also to divert the discussion away from key topics.

Bots don’t express their own opinion, but promote the interests of their customers. They can influence not only ordinary users, but also politicians and journalists, who may come to believe that the majority of people share these views. In this way, bots shape public opinion about a phenomenon or an event. They also build up the image of politicians or criticise their opponents.

A “farm”, or “troll farm”, is a centralised network of bots. It can operate as a private company that works with customers, or as a branch of a politician’s or political party’s headquarters.

Yes, there are bots in Central Asia. In Kazakhstan, they are called “nur-bots” or “nur-fans”. The former praise the government and criticise its adversaries, while the latter leave positive comments about domestic policy and the activities of public authorities.

Factcheck.kz analysed a few dozen fake accounts that left comments on the social media page of the Foundation of the First President of Kazakhstan Nursultan Nazarbayev. It found at least one company that created and ran the bots.

In Kyrgyzstan, the situation is similar, but bots are often called trolls or fakes.

In Tajikistan, bots are known as the “Reply-generating farm” (“Fermai chavob”). Long before social media became popular, every criticism of the authorities in a newspaper was followed by an anonymous reply published in the next issue. These replies did not name their authors, and journalists called them the output of the “Reply-generating factory”. Over time, the “Reply-generating factory” came to be called the “Reply-generating farm”, and both names are still in use.

Today, bots in Tajikistan write comments on the internet in support of the authorities. Unlike in other countries, however, this work is forced and unpaid. Students and state employees are often drawn into it.

Data on Uzbekistan are not available.

As everywhere else, “farms”, “factories” and bots are used to manipulate public opinion and to fight dissidents on the internet.

Bots receive tasks from managers, who give them key topics to comment on. According to the analysis carried out by Factcheck.kz, accounts in Kazakhstan are run systematically and, in terms of social media marketing, competently.

“In fact, you won’t see similar comments. A copywriter or a team of copywriters seem to work with bot runners. Nevertheless, there are many same-type comments, which is due to the specific nature of a given network of bots.”

In fact, this is not so. It can take from several months to several years to create a bot’s account. All this time, the people running it build up the friends list, post photos (usually taken from the accounts of real people in other countries), and publish links and memes to make the account look real.

Even verified accounts on social media can turn out to be fake, so it is sometimes very difficult to distinguish a bot from a real person.

The analytical platform VoxUkraine made a list of criteria that can be used to identify fake accounts:

- On average, bots write their comments 15 times faster than a real user.

- More than half of the bots have “comment buddies”, that is, other fake accounts that leave comments under the same posts. Real people do that only one time out of eight (13%).

- Bots comment on politics four times more often than real users do.

- On average, bots have four times fewer friends than real users.

- Only 4% of bots have at least one check-in at a location. For real people, the figure is 44%.

- Only 50% of bots have a face on their avatar, compared with 92% of real people.

- Only 20% of bots have comments under their own posts, compared with 80% of real people.

- Only 3% of real people have no reactions under their avatars, while among bots the figure is 43%.
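
As an illustration of how such criteria might be combined, the sketch below turns four of the percentages above into a rough score for a single profile. This is not VoxUkraine's method: the function name `bot_log_odds`, the trait names, and the assumption that the traits are independent of one another are all hypothetical choices made for this example. The score is a sum of log-likelihood ratios and ignores how common bots are overall, so it only indicates which way a profile leans.

```python
import math

# (share of bots with the trait, share of real people with the trait),
# copied from the percentages in the list above. The trait names are
# hypothetical labels chosen for this sketch.
TRAIT_RATES = {
    "has_check_in": (0.04, 0.44),
    "face_on_avatar": (0.50, 0.92),
    "comments_under_own_posts": (0.20, 0.80),
    "no_reactions_under_avatar": (0.43, 0.03),
}


def bot_log_odds(profile):
    """Sum log-likelihood ratios over the traits.

    A positive result leans towards "bot", a negative one towards
    "real person". Assumes the traits are independent, which is a
    simplification made for this example.
    """
    score = 0.0
    for trait, (p_bot, p_person) in TRAIT_RATES.items():
        if profile[trait]:
            score += math.log(p_bot / p_person)
        else:
            score += math.log((1 - p_bot) / (1 - p_person))
    return score


# Two made-up profiles for illustration only.
suspicious = {
    "has_check_in": False,
    "face_on_avatar": False,
    "comments_under_own_posts": False,
    "no_reactions_under_avatar": True,
}
ordinary = {
    "has_check_in": True,
    "face_on_avatar": True,
    "comments_under_own_posts": True,
    "no_reactions_under_avatar": False,
}

print(bot_log_odds(suspicious) > 0)  # leans towards "bot"
print(bot_log_odds(ordinary) < 0)    # leans towards "real person"
```

A score like this can at most flag an account for a closer look. As noted above, well-maintained fake accounts are built to look like real ones, so no single trait or sum of traits settles the question.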